In Siemens Digital Industries (DI), harnessing the full potential of digitalisation at scale can be challenging because of installed proprietary software solutions, customised processes, non-standardised interfaces and mixed technologies. At Siemens, however, this did not mean running a large standardisation program before leveraging data analytics and predictive maintenance in our plants.

To get the rubber on the road at scale, we needed an architectural concept that allowed us to develop applications, scale them up, transfer solutions from plant to plant, from engineering to the shop floor and from supplier to customer, and reuse process insights identified in one application in another. During our LDF program, we used the “North Star” concept together with Reference Processes to describe exactly what we were aiming for and which functionalities would deliver the expected benefits. The “North Star” concept is well known to lean practitioners and helps focus all implementation activities.

One “North Star” is linking the digital twins of product, production and performance, which will increase the efficiency of our product lifecycle management (PLM) process and reduce time to market. While engineers design, a cost model calculates costs in real time using a design-cost relation pattern. This is supported mainly by the high maturity and interoperability of DI software solutions built on the PLM data backbone Teamcenter.
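The article does not describe how the design-cost relation pattern is implemented. Purely as an illustration, the sketch below assumes a linear relation between a few design attributes and unit cost and recomputes the estimate on every design change. The attribute names (`component_count`, `board_area_cm2`, `solder_joints`), the rates and `BASE_COST` are hypothetical placeholders, not Siemens data or a Teamcenter API.

```python
from dataclasses import dataclass, field

# Hypothetical cost drivers and rates; real values would be derived from
# historical design and cost data, which the article does not disclose.
COST_RATES = {
    "component_count": 0.12,   # cost per placed component
    "board_area_cm2": 0.05,    # cost per cm² of PCB area
    "solder_joints": 0.002,    # cost per solder joint
}
BASE_COST = 1.50  # hypothetical fixed cost per unit


@dataclass
class DesignState:
    """Hypothetical snapshot of the design attributes that drive cost."""
    features: dict = field(default_factory=dict)

    def estimated_cost(self) -> float:
        # Linear design-cost relation: base cost plus rate * attribute value.
        # Attributes without a known rate are ignored.
        return BASE_COST + sum(
            COST_RATES[name] * value
            for name, value in self.features.items()
            if name in COST_RATES
        )

    def update(self, name: str, value: float) -> float:
        # Recompute after every design change to mimic a "real-time" estimate.
        self.features[name] = value
        return self.estimated_cost()
```

For example, `DesignState().update("component_count", 100)` returns roughly 13.5 under these assumed rates. A production cost model would more likely be fitted from historical data and could be non-linear; the linear form is chosen here only to keep the idea visible.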

Another “North Star” is the full digital visualisation of the complete plant with its production lines and cells. This includes logistics processes for material and tools, modelled with “Process Simulate” and “Plant Simulation”, to design and optimise all assets on the shop floor. All data generated during production are collected and analysed with artificial intelligence methods and fed back into the product and production design process for continuous optimisation.

Another example is the full automation of planning, scheduling and sequencing of production orders to ensure the best utilisation of assets during production. At the same time, we want to come as close as possible to one-piece flow and a takt time equal to the customer's takt time. One use of our Manufacturing Data Platform (MDP) in this context is capacity balancing. The DI factory network includes many electronics plants with surface-mount technology (SMT) production lines. To exploit this synergy, we are creating a marketplace where capacity can be balanced across factory boundaries.
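How the MDP marketplace matches demand and supply is not specified here. As a minimal sketch, assuming plants publish free SMT hours with a cost rate and overloaded plants post orders needing a given number of hours, a simple greedy heuristic can assign each order to the cheapest plant with enough spare capacity. The classes, plant names and cost figures are hypothetical, and greedy matching is a stand-in technique, not the MDP's actual algorithm.

```python
from dataclasses import dataclass


@dataclass
class CapacityOffer:
    """Hypothetical offer: a plant publishes free SMT line hours."""
    plant: str
    free_hours: float
    cost_per_hour: float


@dataclass
class CapacityRequest:
    """Hypothetical request: an order that needs SMT line hours elsewhere."""
    order_id: str
    hours: float


def balance_capacity(offers, requests):
    """Greedily assign each request to the cheapest plant with enough free hours.

    Orders are not split across plants. Offers are mutated in place as
    capacity is consumed. Returns (assignments, unassigned), where
    assignments maps order_id -> plant.
    """
    assignments = {}
    unassigned = []
    # Place larger requests first so they are less likely to be stranded.
    for req in sorted(requests, key=lambda r: r.hours, reverse=True):
        candidates = [o for o in offers if o.free_hours >= req.hours]
        if not candidates:
            unassigned.append(req.order_id)
            continue
        best = min(candidates, key=lambda o: o.cost_per_hour)
        best.free_hours -= req.hours  # consume capacity at the chosen plant
        assignments[req.order_id] = best.plant
    return assignments, unassigned
```

With offers of 10 h at "Plant A" and 20 h at "Plant B" and orders of 15 h, 10 h and 8 h, the 15 h order goes to Plant B, the 10 h order to Plant A, and the 8 h order remains unassigned because neither plant has 8 h left. A real marketplace would also weigh logistics, due dates and product qualification per line, which an optimisation model handles better than a greedy rule.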

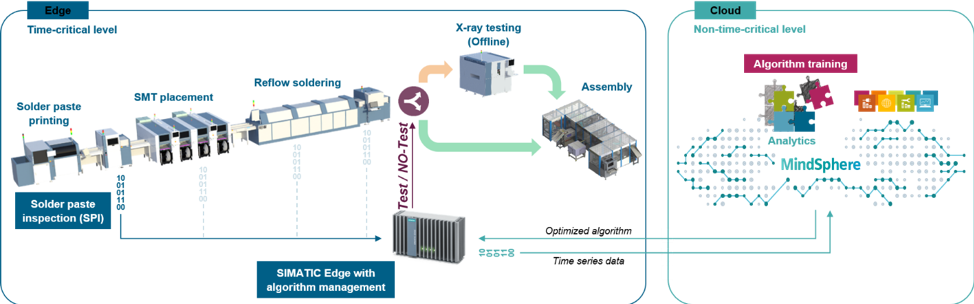

On the shop floor, autonomous guided vehicles with swarm intelligence and robot farms form a cyber-physical system that organises intralogistics material supply and highly flexible work arrangements. Artificial intelligence, machine learning algorithms and pattern recognition enable predictive maintenance, reduced test effort and higher machine utilisation, with relevant information distributed to people via connected smart devices. In cooperation with Schmalz, we improved machine utilisation in our Amberg factory by optimising a packaging machine with an intelligent sensor from Schmalz and Siemens software.

The data for all these scenarios must be extracted from different sources such as the ERP system, the Siemens PLM system “Teamcenter”, the MES and SCADA levels, “Industrial Edge” (shop-floor data), Simatic PLCs, sensors and other sources not yet known.

Given all the scenarios and target states we are aiming for, it becomes clear that a traditional data warehouse architecture has its limits. Data is now characterised by the 9 Vs of big data: volume, velocity, variety, veracity, value, volatility, visibility, viability and validity. Data volumes and streaming data have exploded in factories because of traceability requirements, legal regulations and quality assurance. Today, we want to analyse data from many sources: video feeds, photographs, process data, test data, log files and even text files. This raises several questions: which data can be trusted, which should be kept and which discarded? Do all values need to be expressed in the same units, and how long should the data be retained?
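The questions about units and retention can be made concrete with a small example. The sketch below assumes each incoming record carries its source, a data category, a value and a unit; it converts values to a common base unit and applies a per-category retention period. The conversion table, the category names and all retention periods are hypothetical placeholders, since the real periods depend on legal, traceability and quality requirements not stated here.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

# Hypothetical conversion table: unit -> (base unit, multiplicative factor).
# Only purely multiplicative units are listed; offset units such as °C vs K
# would need a separate rule.
TO_BASE_UNIT = {
    "mm": ("m", 0.001),
    "cm": ("m", 0.01),
    "m": ("m", 1.0),
    "ms": ("s", 0.001),
    "s": ("s", 1.0),
    "min": ("s", 60.0),
}

# Hypothetical retention periods per data category.
RETENTION = {
    "traceability": timedelta(days=365 * 10),
    "process": timedelta(days=365),
    "video": timedelta(days=30),
}


@dataclass
class Measurement:
    source: str        # e.g. "MES", "PLC", "sensor"
    category: str      # key into RETENTION
    value: float
    unit: str
    timestamp: datetime


def normalise(m: Measurement) -> Measurement:
    """Return a copy converted to its base unit; unknown units raise KeyError."""
    base_unit, factor = TO_BASE_UNIT[m.unit]
    return Measurement(m.source, m.category, m.value * factor, base_unit, m.timestamp)


def should_retain(m: Measurement, now: datetime) -> bool:
    """Keep a record while its age does not exceed its category's retention period."""
    return now - m.timestamp <= RETENTION[m.category]
```

Raising on unknown units is a deliberate choice in this sketch: silently passing through unconverted values would undermine the veracity of the data set.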

The Manufacturing Data Platform (MDP)